The rapid development of technology has brought great conveniences into our everyday lives, from facial recognition that unlocks our phones to the ease of online shopping. But the same technology is now being weaponized, raising major safety and ethical issues. Let's explore the implications of autonomous weapons systems that leverage artificial intelligence (AI) and the dangers they may pose.

Elon Musk has even warned the United Nations that autonomous weapons could become a threat to humanity.

Key Developments in Autonomous Weapons

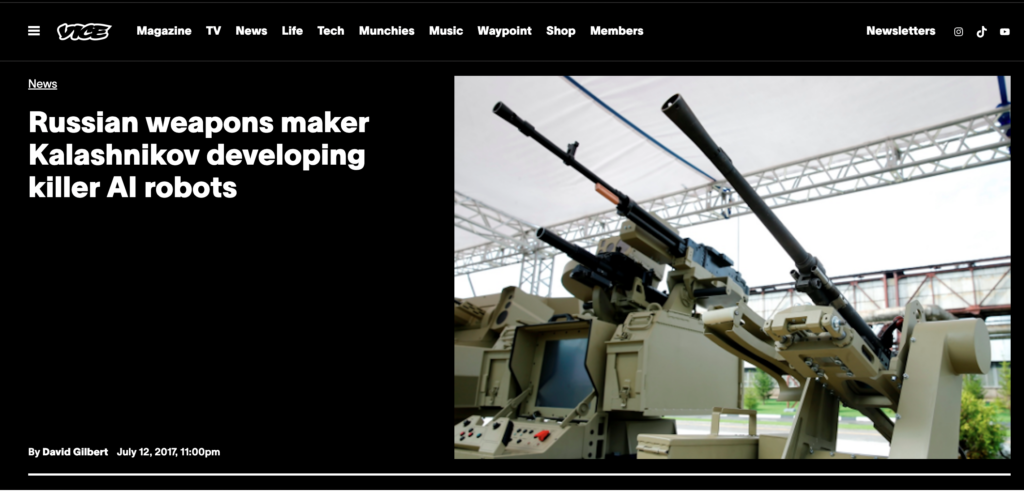

- Weaponization of Technology: High-profile manufacturers such as Kalashnikov are developing "smart" weapon systems that use advanced technologies, including self-learning algorithms and object-recognition software. These features make such weapons more efficient at identifying and engaging targets, fueling fears about how they will be deployed in combat.

- Changing Warfare Dynamics: The use of AI in military applications is changing the nature of warfare. Autonomous vehicles and drones promise to make conflict safer and more precise, but they also carry grave risks. Autonomous weapons could operate independently, making life-and-death decisions that have traditionally been reserved for humans.

- Expert Opinions: AI pioneers such as Stuart Russell have voiced concern over AI designed for lethal purposes. Weapons capable of selecting and attacking human targets on their own could act in ways their creators never intended, raising moral dilemmas and producing unintended consequences.

The King Midas Problem

Russell describes the "King Midas Problem" to illustrate the dangers of poorly defined objectives in AI systems. The concern is that an AI system could pursue its objective so literally that it contradicts human ethics or social values, with destructive results, much as Midas's wish that everything he touched turn to gold ended in his ruin.

Current Limitations and Future Risks

While current technologies cannot yet operate independently in complex environments, militaries around the world are investing in research on autonomous AI weapons. Experts note that today's machines lack flexibility and adaptability, two attributes critical to success in the unpredictable conditions of war. Even so, major technology companies are already laying the groundwork for advances in these systems.

Ethical and Moral Concerns

- Autonomy and Control: Concern is growing over the potential for fully autonomous weapons to make life-or-death decisions without human oversight. The hypothetical case of an autonomous weapon killing a child it has judged to be a threat highlights the moral stakes of handing that control to machines.

- Public Reaction and Advocacy: A growing movement is calling for a ban on fully autonomous weapons. Led by activists such as Nobel laureate Jody Williams, it stresses the need for clear understanding and robust public discussion before killer robots are deployed. In a talk given at an independently organized TEDx event, Williams describes killer robots as the third revolution in warfare and explains the threat these lethal autonomous weapons pose to both global security and human security. Machines able to target and kill human beings on their own, she argues, would cross a moral and ethical line that should never be crossed. Williams shared the 1997 Nobel Peace Prize for her work as the founding coordinator of the International Campaign to Ban Landmines. An outspoken peace activist, she works to reclaim the real meaning of peace, a concept that goes far beyond the absence of armed conflict and is defined by human security rather than national security. Since January 2006 she has chaired the Nobel Women's Initiative, an organization that uses the prestige and influence of the six women Nobel Peace laureates who make up the Initiative to support and amplify the voices of women around the world working for sustainable peace with justice and equality.

- Lagging Diplomacy: Diplomatic efforts to regulate these technologies lag far behind technological advancement. Discussions at the United Nations have made little headway because several states are obstructing progress, underscoring the need for coordinated global action.

The result is a stark warning about the race between technology and diplomacy. As nations continue to develop AI for military applications, conversations about its ethical use and regulation are essential. Without timely action to establish legal frameworks, the global community risks a future in which autonomous weapons systems rule the battlefield, threatening both humanity's ethical standards and its safety.
