The Psychological and Legal Impact of Facial Recognition Errors in Retail

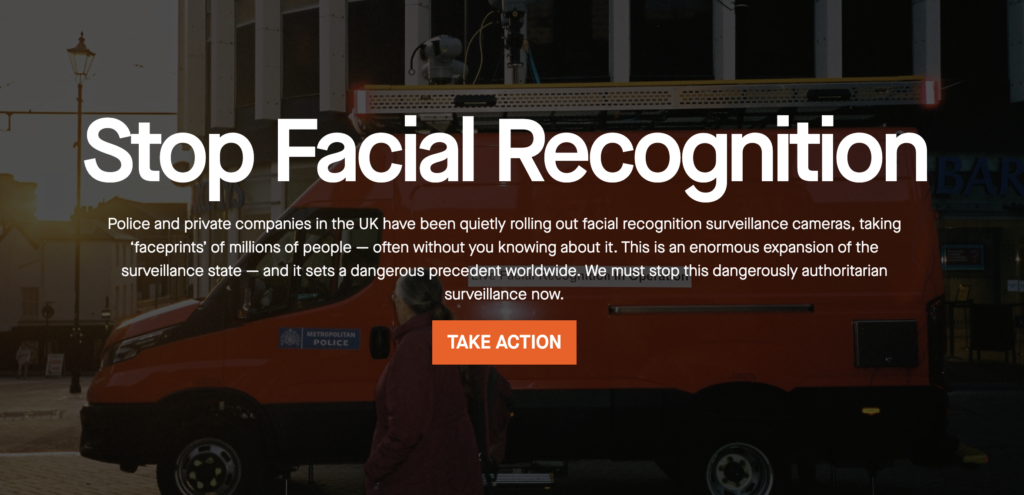

The integration of biometric surveillance into the retail environment has fundamentally altered the relationship between consumers and commercial spaces. While retailers argue these systems are essential for loss prevention, the lived experience of misidentified individuals reveals a profound psychological toll, often described as feeling like a “criminal” or being subjected to a “trial” without due process.

The Case of Warren Rajah: A “Dystopian Nightmare”

On January 27, 2026, Warren Rajah, a 42-year-old data strategist, was shopping at a Sainsbury’s branch in Elephant and Castle, London, when he was approached by three staff members and a security guard. Despite being a loyal customer for over a decade, Warren was escorted from the premises and his shopping was confiscated.

The incident was triggered by an alert from Facewatch, a cloud-based facial recognition system. Although Sainsbury’s and Facewatch later claimed the technology had a “99.98% accuracy rate,” they admitted that “human error” led staff to approach the wrong person. Warren described the experience as “Orwellian” and “borderline fascistic,” noting that he felt as if the supermarket staff acted as his “judge, jury, and executioner.”
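A headline accuracy figure can still coexist with regular false alerts, and simple arithmetic shows why. The sketch below is illustrative only. It assumes, hypothetically, that “99.98% accuracy” means a 0.02% chance of a false alert per face scanned, which neither Sainsbury’s nor Facewatch is quoted as confirming. The footfall figures and the function name `expected_false_alerts` are invented for the example.

```python
def expected_false_alerts(faces_scanned: int, false_alert_rate: float) -> float:
    """Expected number of wrongly flagged people for a given per-scan false alert rate."""
    return faces_scanned * false_alert_rate


# ASSUMPTION: "99.98% accuracy" is read as a 0.02% false alert rate per scan.
ASSUMED_FALSE_ALERT_RATE = 1 - 0.9998

# Hypothetical daily scan volumes, not real store data.
for daily_scans in (1_000, 10_000, 100_000):
    alerts = expected_false_alerts(daily_scans, ASSUMED_FALSE_ALERT_RATE)
    print(f"{daily_scans:>7} scans/day -> about {alerts:.1f} false alerts/day")
```

Under these assumed numbers, the expected count of false alerts grows in direct proportion to the number of faces scanned, so a small error rate can still affect real people every day across a large estate of stores.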

Psychological and Sociological Implications of Biometric Errors

The feeling of being “branded a criminal” is a common theme among those misidentified by AI surveillance. Academic and sociological studies on surveillance emphasize that such technologies do not merely “watch” but actively “categorize” individuals, often leading to “social sorting.”

- Presumption of Guilt: Unlike the criminal justice system, where a suspect is presumed innocent until proven guilty, biometric alerts in retail often reverse the burden of proof in practice. Victims like Warren Rajah are forced to provide sensitive documents, such as passport copies, to private companies to “clear their name.”

- Public Humiliation: The act of being escorted out of a public space in front of one’s community causes significant distress. Warren noted that for vulnerable individuals, such an ordeal could be “mentally debilitating.”

- The “Black Box” Problem: Victims often face a lack of transparency. When Warren asked for an explanation, he was simply pointed to a sign or given a QR code, highlighting the “accountability vacuum” in automated security.

Broader Patterns of Misidentification

Being treated as a criminal because of a facial recognition error is not an isolated experience. Several other shoppers in the UK have reported similar ordeals:

- Byron Long (B&M, Cardiff): A 66-year-old man was falsely accused of stealing cat treats. He described the experience as having a “serious impact” on his mental health, leading to chronic anxiety while shopping.

- Anonymous Shopper (Home Bargains): A woman was wrongly flagged, had her bag searched, and was told she was banned from all stores using the technology. She reported “crying and crying the entire journey home,” fearing her life would never be the same.

- Danielle Horan (Home Bargains, Manchester): Horan was flagged by the Facewatch system as a shoplifter, publicly confronted, and ordered out of two separate shops. It was later revealed that a store had incorrectly entered her data into the system following a legitimate purchase, illustrating how a single error in a shared database can lead to systemic exclusion.

Documented Cases of Wrongful Identification and Arrest

The human cost of these algorithmic failures is evidenced by a growing list of individuals who have been wrongly branded as criminals or incarcerated due to “false positives.”

United States

- Nijeer Parks (New Jersey): In 2019, Parks was falsely accused of shoplifting and aggravated assault against a police officer in Woodbridge, N.J. Although he was 30 miles away at the time, he was identified through facial recognition technology (FRT). He spent 10 days in jail and faced a potential 10-year sentence before the case was dismissed for lack of evidence.

- Robert Williams (Michigan): Williams, a Black man, was the subject of the first publicly reported wrongful arrest in the U.S. attributed to a facial recognition error. He was arrested in his driveway in front of his family for a shoplifting incident at a Shinola store in Detroit that he did not commit.

- Michael Oliver (Michigan): Oliver was wrongfully accused of a felony larceny in Detroit after an FRT match mistakenly identified him as a suspect who had grabbed a teacher’s phone. The case was eventually dismissed when it was proven he was not the individual in the video.

- Porcha Woodruff (Michigan): Woodruff was eight months pregnant when she was arrested by Detroit police for carjacking and robbery based on an automated facial recognition match. The charges were later dropped.

- Harvey Murphy (Texas): Murphy was wrongly accused of an armed robbery at a Sunglass Hut. He spent time in jail and was reportedly assaulted while incarcerated before it was proven he was in another state during the crime.

Facial Recognition Misuse and Human Rights Concerns

The application of FRT extends far beyond the United States, often intersecting with political suppression and the erosion of anonymity in public spaces.

- France (The “Mr. H” Case): Following a 2019 burglary of a logistics company, French police used FRT on security footage, which generated a list of 200 potential suspects. “Mr. H” was singled out and sentenced to 18 months in prison despite a lack of physical evidence. The court notably refused to disclose the technical parameters of how the FRT system compiled the suspect list.

- The Netherlands: Football clubs have reportedly used FRT to identify fans banned from stadiums. In one instance, a supporter was wrongly fined over a match they did not attend because of a false positive.

- United Kingdom: The South Wales Police and London’s Metropolitan Police have faced legal challenges regarding the use of live FRT. In the landmark case Bridges v South Wales Police, the Court of Appeal ruled that South Wales Police’s use of the technology was unlawful because too much discretion was left to individual officers and the force had not adequately assessed whether the software was biased by race or gender.

- China: Published accounts of the “surveillance state” in China describe the use of FRT to track the Uyghur Muslim minority in Xinjiang. The technology is reportedly used not just for crime prevention but also for “predictive policing” and racial profiling, creating what has been described as a digital “open-air prison.”

- Argentina: In Buenos Aires, the “Sistema de Reconocimiento Facial de Prófugos” (Facial Recognition System for Fugitives) was suspended by a judge in 2022 after it was discovered the system was being used to access the biometric data of political figures, activists, and journalists who were not on any fugitive list.

Legal and Ethical Concerns

Civil liberties groups, such as Big Brother Watch, argue that these systems turn shoppers into “walking barcodes.” From a legal perspective, the use of Live Facial Recognition (LFR) by private entities raises significant concerns under the UK General Data Protection Regulation (UK GDPR) and the Data Protection Act 2018.

The Information Commissioner’s Office (ICO) has emphasized that while facial recognition can assist in crime prevention, retailers must have “robust procedures” to ensure accuracy, especially when the impact on an individual is as severe as being falsely accused of a crime.

