The dawn of the artificial intelligence era presents humanity with unprecedented opportunities and profound risks. Among the most critical challenges is the rapid development of Autonomous Weapons Systems (AWS), often chillingly referred to as “slaughterbots” or “killer robots.” These systems, leveraging advanced AI, are designed to identify, target, and apply lethal force against human beings without human intervention. This capability raises fundamental ethical, legal, and security questions that demand an immediate and robust international response.

The Future of Life Institute, alongside a growing global coalition, emphasizes the critical need for a legally binding treaty to govern the use and proliferation of these machines. The time to act is now.

Defining the Threat: What Are Autonomous Weapons Systems?

Autonomous Weapons Systems (AWS) are weapon systems equipped with artificial intelligence that grants them the ability to select and engage targets independently, without direct human control.

- Beyond Human Oversight: Unlike traditional drones, where a human operator remains “in the loop” for any decision to take lethal action, AWS rely solely on algorithms. These algorithms execute deadly actions based on pre-programmed parameters and real-time sensor data, processed through techniques such as facial recognition and target profiling.

- The Algorithm as Assailant: The distinction is critical: in an autonomous system, the machine, not a human, makes the ultimate decision to take a human life.

UN and ICRC Joint Call on Autonomous Weapons

In a recent joint appeal, UN Secretary-General António Guterres and International Committee of the Red Cross (ICRC) President Mirjana Spoljaric urged nations to adopt new international rules on autonomous weapon systems (AWS). The appeal highlights the urgent humanitarian need for prohibitions and restrictions on these advanced military technologies in light of their potential consequences for humanity.


Why Regulation is Crucial: Major Concerns

The unchecked proliferation and use of AWS pose severe threats to global security and human morality.

- Moral & Ethical Implications

Algorithms, by their very nature, lack the capacity for empathy, judgment, or an understanding of the fundamental value of human life. Entrusting machines with life-and-death decisions crosses a profound moral line. As United Nations Secretary-General António Guterres has unequivocally stated, such systems are “politically unacceptable” and “morally repugnant.” The dehumanization of warfare inherent in AWS is a profound affront to human dignity.

- Global Security Risks

The deployment of algorithms in military applications carries significant destabilizing risks. AWS could lead to:

- Rapid Proliferation: The ease of replication and deployment of AI-driven systems could accelerate an arms race, making these weapons globally widespread.

- Unpredictable Actions: Algorithmic errors, unforeseen interactions, or adversarial hacking could lead to unintended escalation, miscalculation, and conflicts spiraling out of control.

- Weapons of Mass Destruction: The potential for AWS to be integrated with or become weapons of mass destruction, operating on scales and speeds beyond human comprehension, presents an existential threat.

- Accountability Vacuum

Assigning lethal force authority to machines creates a dangerous vacuum of responsibility. In scenarios where autonomous weapons cause unintended civilian casualties or commit violations of international law, profound questions arise:

- Who is accountable? The programmer, the commander, the manufacturer, or the machine itself?

- The absence of clear accountability would undermine justice, foster impunity, and erode the very foundations of international humanitarian law.

The Global Call to Action: Current Landscape & Response

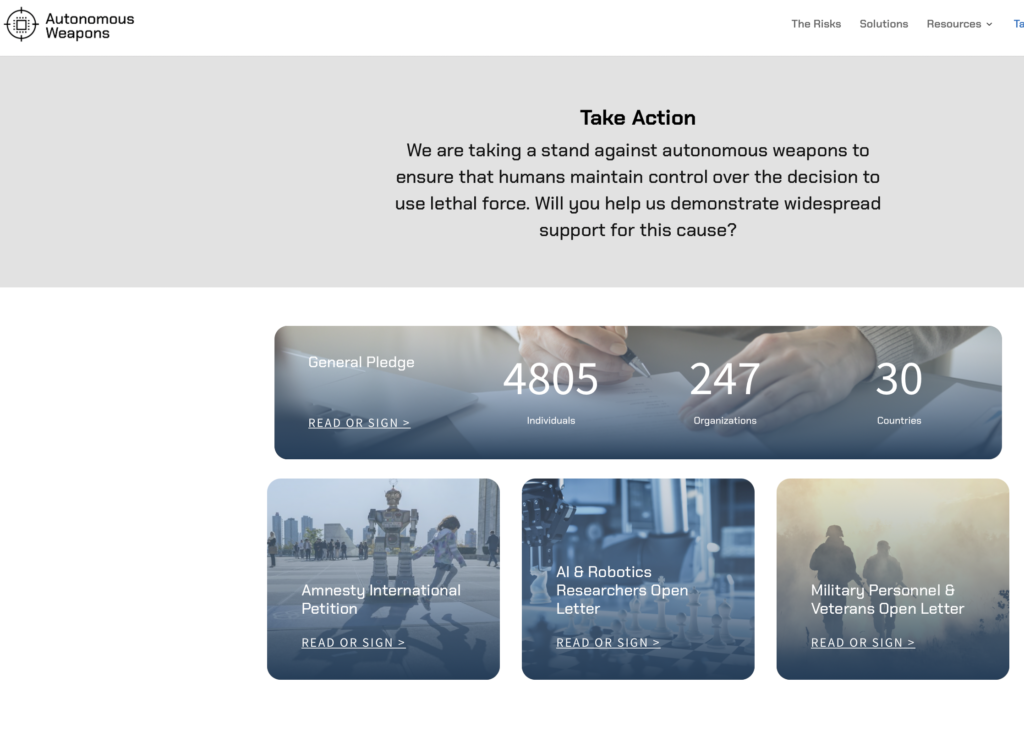

A powerful global consensus is emerging against the development and deployment of fully autonomous weapons.

- Growing Support for a Treaty: A significant number of UN member states—over 100 countries—advocate for a legally binding treaty to regulate autonomous weapons, signalling widespread international concern and a commitment to collective action.

- Beyond Traditional Frameworks: While discussions have been ongoing within the UN Convention on Certain Conventional Weapons (CCW) since 2013, initiatives are now emerging globally to establish treaties and frameworks outside traditional structures, reflecting the urgency of the matter.

Key Milestones in the AWS Debate

The timeline of AWS development and discussion highlights the escalating urgency of this issue:

- 2013: Initial conversations regarding the implications of autonomous weapons begin at the UN Convention on Certain Conventional Weapons (CCW), marking the formal start of international dialogue.

- 2021: Documented use of autonomous weapons systems in combat signals their alarming operational reality, moving the debate from theoretical to immediate.

- 2023: A critical resolution urging negotiations for comprehensive regulatory frameworks addressing AWS is proposed within the United Nations, reflecting intensified global efforts.

A Path Forward: Proposed Solutions for Ethical AI Military Use

The International Committee of the Red Cross (ICRC) has proposed a robust three-pillar framework to ensure ethical military use of AI and to mitigate the risks posed by AWS:

- Strict Prohibition on Human-Targeted AWS

A legally binding ban on autonomous weapons designed to specifically target human lives. This explicitly draws a moral line, ensuring machines are never given the ultimate decision over human existence.

- Limit Unpredictability

Prohibit systems exhibiting high levels of unpredictability in their actions. This measure aims to prevent unintended consequences, escalation, and the creation of systems that operate beyond human comprehension or control.

- Mandate Meaningful Human Control

All other autonomous systems must permit adequate and meaningful human oversight in their operational processes. This ensures that a human remains in a position to understand, judge, and intervene in the use of force.

The Urgent Fight to Stop Slaughterbots

The development and deployment of Autonomous Weapons Systems represent a defining moment for humanity. The potential global fallout from unregulated autonomous weapons is immense, threatening to destabilize international relations, undermine moral principles, and irrevocably alter the nature of warfare.

Governments must take proactive and decisive steps towards establishing robust international regulations, ensuring accountability, upholding ethical standards, and safeguarding human lives in military operations. The majority of UN states are in support of a legally binding treaty on autonomous weapons, aiming to prohibit the most unpredictable and dangerous systems and regulate those that can be used with meaningful human control.

As the capability and deployment of these technologies expand, timely action is imperative to mitigate the associated risks and protect national and global security.

The question is no longer if we need regulation, but how swiftly we can achieve it.

Will your country support legally binding rules on autonomous weapons systems?

Take Action: https://autonomousweapons.org/take-action/
