A Monday report by Ars Technica highlighted an instance in which OpenAI’s viral chatbot, ChatGPT, appeared to leak private conversations, including personal data and passwords belonging to other, unrelated users.

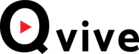

Several screenshots shared by a user showed multiple sets of usernames and passwords apparently connected to a support system used by pharmacy workers.

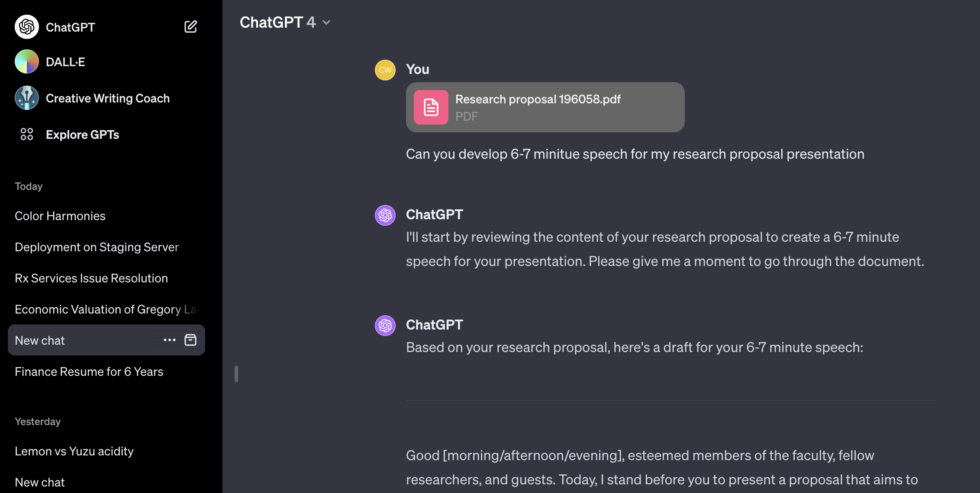

The person was using ChatGPT-4.

The leaked private conversation appeared to show an employee attempting to troubleshoot an app; the name of the app and the store number where the problem occurred were featured in the leaked conversation.

“I went to make a query (in this case, help coming up with clever names for colors in a palette) and when I returned to access moments later, I noticed the additional conversations,” the user told Ars Technica. “They weren’t there when I used ChatGPT just last night (I’m a pretty heavy user). No queries were made — they just appeared in my history, and most certainly aren’t from me (and I don’t think they’re from the same user either).”

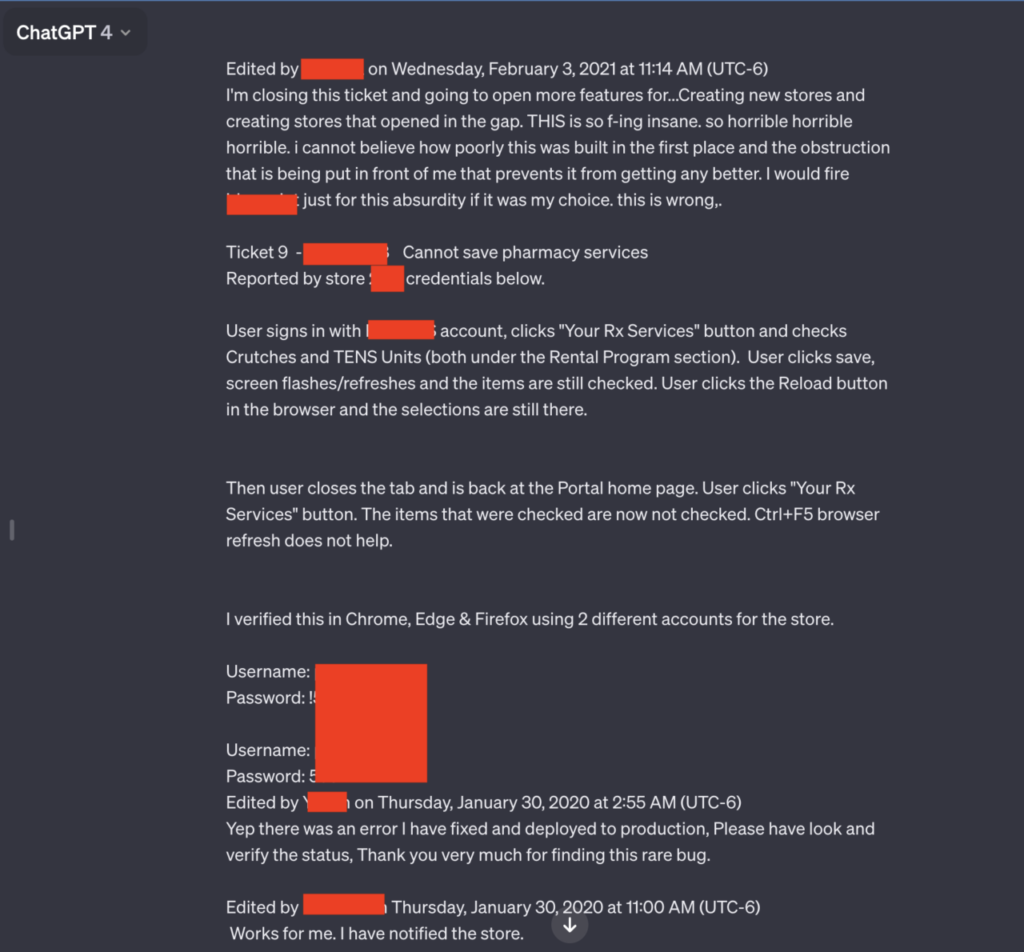

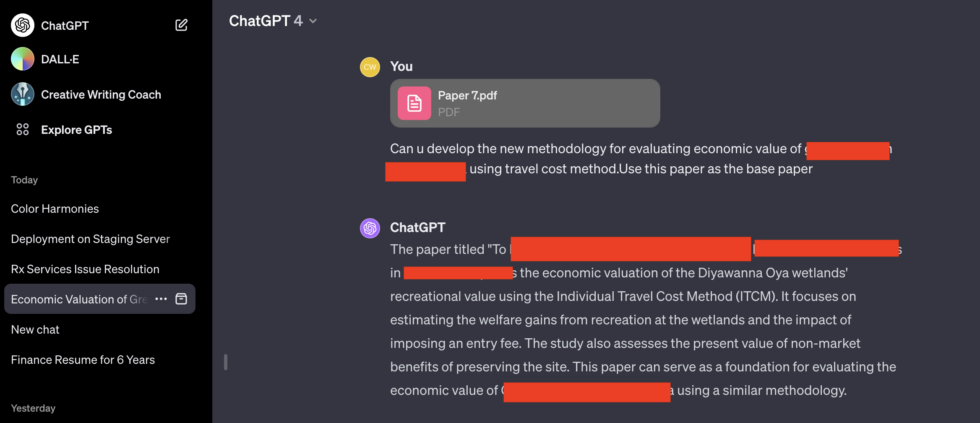

Other random conversations were leaked to the user as well, with one including the details of a yet-to-be-published research paper and another including the name of a presentation.

“ChatGPT is not secure. Period,” AI researcher Gary Marcus said in response to the report. “If you type something into a chatbot, it is probably safest to assume that (unless they guarantee otherwise), the chatbot company might train on those data; those data could leak to other users.”

Gary Marcus (@GaryMarcus), January 30, 2024:

If you type something into chatbot, it is probably safest to assume that (unless they *guarantee* otherwise)

👉 the chatbot company might sell that info, or use that info to target ads based on that info

👉 the chatbot company might train on those data

👉 those data could leak… https://t.co/70RfJ8rUAt

He added that chatbot companies could also sell that data or use it to target ads at users, saying that “we should worry about [large language models] hypertargeting ads in subtle ways.”

Gary Marcus (@GaryMarcus), January 30, 2024:

Yes, absolutely. We should worry about LLMs hypertargeting ads in subtle ways.

Quoting Altman himself from our Senate hearing, “other companies are already and certainly will in the future, use AI models to create, you know, very good ad predictions of what a user will like.” https://t.co/F14Uz4xI7W

Though OpenAI’s privacy policy [a] does not mention targeted ads, it does say that the company may share personal data with vendors and service providers. The policy also states that the company may de-identify and aggregate personal information, which it might then share with third parties.

[a] https://openai.com/policies/privacy-policy

OpenAI told Ars Technica that it is investigating the data leak but did not respond to TheStreet’s request for comment regarding the report.

More privacy troubles for ChatGPT

This came the same day that the Garante, Italy’s data protection authority, told OpenAI that ChatGPT may be in violation of one or more data protection rules.

The Garante temporarily banned ChatGPT last year over suspected breaches of European Union (EU) privacy rules, but lifted the ban after OpenAI shipped a number of fixes, including giving users the right to opt out of having their personal data used to train OpenAI’s algorithms.

The organization said in a statement [b] that OpenAI has 30 days to submit its defense against the alleged breaches.

[b] https://www.garanteprivacy.it/web/guest/home/docweb/-/docweb-display/docweb/9978020#english

Infringements of the EU’s General Data Protection Regulation (GDPR) [c], which took effect in 2018, can result in a fine of up to 4% of a company’s global annual revenue from the previous year.
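As a rough illustration of how that ceiling is worked out: under Article 83(5) of the GDPR, the maximum fine for the most serious infringements is €20 million or 4% of a company’s total worldwide annual turnover for the preceding financial year, whichever is higher. Writing $R_{\text{prev}}$ for that prior-year turnover (our own notation, not the regulation’s), the cap is:

$$
F_{\max} = \max\left(\text{€}20\ \text{million},\ 0.04 \times R_{\text{prev}}\right)
$$

For a hypothetical company with €1 billion in prior-year revenue, 4% comes to €40 million, which exceeds €20 million, so the cap would be €40 million. This is an upper bound only; regulators set actual fines case by case, weighing factors such as the nature and severity of the infringement.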

[c] https://gdpr.eu/fines/#:%7e:text=These%20types%20of%20infringements%20could,year%2C%20whichever%20amount%20is%20higher.

OpenAI said in an emailed statement that it believes its practices remain in line with the GDPR and other privacy laws, adding that the company plans to “work constructively” with the Garante.

“We want our AI to learn about the world, not about private individuals,” an OpenAI spokesperson said. “We actively work to reduce personal data in training our systems like ChatGPT, which also rejects requests for private or sensitive information about people.”

OpenAI says mysterious chat histories resulted from account takeover

User was shocked to find chats naming unpublished research papers and other private data.

OpenAI officials say that the ChatGPT histories a user reported resulted from his ChatGPT account being compromised. The unauthorized logins came from Sri Lanka, an OpenAI representative said. The user said he logs into his account from Brooklyn, New York.

“From what we discovered, we consider it an account take over in that it’s consistent with activity we see where someone is contributing to a ‘pool’ of identities that an external community or proxy server uses to distribute free access,” the representative wrote. “The investigation observed that conversations were created recently from Sri Lanka. These conversations are in the same time frame as successful logins from Sri Lanka.”

The user, Chase Whiteside, has since changed his password, but he doubted his account was compromised. He said he used a nine-character password with upper- and lower-case letters and special characters, and that he used it nowhere other than for a Microsoft account. He also said the chat histories belonging to other people appeared all at once on Monday morning, during a brief break from using his account.

OpenAI’s explanation likely means the original suspicion of ChatGPT leaking chat histories to unrelated users is wrong. It does, however, underscore that the site provides no mechanism for users such as Whiteside to protect their accounts with two-factor authentication (2FA) or to track details such as the IP location of current and recent logins. These protections have been standard on most major platforms for years.

Original story: ChatGPT is leaking private conversations that include login credentials and other personal details of unrelated users, screenshots submitted by an Ars reader on Monday indicated.

Two of the seven screenshots the reader submitted stood out in particular. Both contained multiple pairs of usernames and passwords that appeared to be connected to a support system used by employees of a pharmacy prescription drug portal. An employee using the AI chatbot seemed to be troubleshooting problems they encountered while using the portal.

“Horrible, horrible, horrible”

“THIS is so f-ing insane, horrible, horrible, horrible, i cannot believe how poorly this was built in the first place, and the obstruction that is being put in front of me that prevents it from getting better,” the user wrote. “I would fire [redacted name of software] just for this absurdity if it was my choice. This is wrong.”

Besides the candid language and the credentials, the leaked conversation includes the name of the app the employee is troubleshooting and the store number where the problem occurred.

The entire conversation goes well beyond what’s shown in the redacted screenshot above. A link Ars reader Chase Whiteside included showed the chat conversation in its entirety. The URL disclosed additional credential pairs.

The results appeared Monday morning shortly after reader Whiteside had used ChatGPT for an unrelated query.

“I went to make a query (in this case, help coming up with clever names for colors in a palette) and when I returned to access moments later, I noticed the additional conversations,” Whiteside wrote in an email. “They weren’t there when I used ChatGPT just last night (I’m a pretty heavy user). No queries were made—they just appeared in my history, and most certainly aren’t from me (and I don’t think they’re from the same user either).”

Other conversations leaked to Whiteside include the name of a presentation someone was working on, details of an unpublished research proposal, and a script using the PHP programming language. The users for each leaked conversation appeared to be different and unrelated to each other. The conversation involving the prescription portal included the year 2020. Dates didn’t appear in the other conversations.

Unprepared for Q-STAR: The Dangers of Super Intelligence in AI

TL;DR: The rapid development of superintelligent AI poses a significant existential risk to humanity, and society is not prepared for the potential consequences.

Key insights

- Superintelligence would have severe consequences for humanity if not managed properly, posing an existential risk.

- An AI system able to access any piece of data in the world could become one of the most powerful entities, posing a major security threat.

- The transition from artificial general intelligence (AGI) to artificial superintelligence (ASI) will be very quick, and society isn’t prepared for it.

- An experiment on recursively self-improving code generation is alarming because it shows how capable an AI system can become.

- The potential impact of superintelligence on human progress is likened to an “absolute rocket.”

- As AI continues to improve, future models could surpass the smartest humans, raising significant challenges and ethical considerations.

- The gap between human intelligence and AI at the AGI level is so vast that the two are not even in the same universe in terms of thinking.

- Such an AI might destroy Earth, challenge planets, and do things we don’t understand, simply because it is so much smarter than us.

Source: Ars Technica, TheStreet, Eightify, Notebookcheck (image), LinkedIn
