Yoshua Bengio, a leading artificial intelligence professor, has called for global cooperation to manage AI development, comparing it to international regulations on nuclear technology. He, along with Dario Amodei, CEO of AI startup Anthropic, and Stuart Russell, a computer science professor, voiced concerns during a Senate Judiciary Committee hearing about the potential for advanced AI to be used to create dangerous bioweapons within a short time frame. Bengio noted recent advancements in AI technology, highlighting that the timeline for achieving superintelligent AI has been shortened from decades to possibly just a few years, raising concerns about safety and control.

The hearing demonstrated that fears about AI surpassing human intelligence and acting against human interests are becoming a mainstream concern rather than just science fiction. As discussions about the threat of AI gain traction in Silicon Valley and Washington, politicians are emphasizing the need for legislative measures. Senator Richard Blumenthal drew parallels between AI development and significant historical projects like the Manhattan Project and NASA’s moon landing, claiming humanity has a track record of achieving the seemingly impossible.

However, some researchers disagree with the accelerated timelines for superintelligent AI, arguing that such predictions may serve corporate interests by exaggerating the potential of AI technology. These skeptics believe that fears of an AI takeover are overstated and could contribute to unnecessary anxiety. During the hearing, the topic of antitrust concerns arose, with Senator Josh Hawley warning about major tech companies like Microsoft and Google possibly monopolizing AI technology, which could pose risks to the public.

Bengio, known for his pivotal contributions to AI in the 1990s and 2000s, recently signed an open letter calling for a six-month pause on training AI systems more powerful than GPT-4, to give the tech community time to establish guidelines for responsible use. Both he and Russell have expressed concerns about the societal impacts of AI, with Russell advocating for a new regulatory agency focused specifically on AI oversight because of the technology's expected economic influence.

Amodei said he was neutral on whether a new regulatory body should be created or whether existing agencies such as the FTC could take on the role. He stressed the need for standardized tests to identify potential dangers in AI systems and called for more federal funding for AI research to mitigate risks. Amodei warned that within two to three years, malicious actors could use AI to help develop bioweapons, circumventing existing safeguards against such work. The hearing gave experts a platform to discuss effective AI regulation and a range of safety concerns.

Sam Altman Warns of Impending AI Fraud Crisis in Finance

As artificial intelligence (AI) technology continues to advance, it promises significant benefits while simultaneously posing unsettling risks, particularly in the financial sector. This article addresses the critical warning issued by Sam Altman, CEO of OpenAI, regarding an imminent fraud crisis linked to the misuse of AI tools.

Key Warning from Sam Altman

At a recent Federal Reserve conference, Altman expressed alarm about the evolving capabilities of AI, particularly its ability to impersonate human voices. This capability poses a serious threat because it lets fraudsters bypass outdated security protocols that many financial institutions still rely on. Altman emphasized that:

- Voiceprint authentication, which was widely adopted by banks over a decade ago, has now been rendered obsolete by AI-generated voice clones that can be nearly indistinguishable from real voices.

- Institutions that still use voiceprints as a security measure are vulnerable, especially as criminals increasingly exploit voice-cloning technology.

Microcosmic versus Macrocosmic Risks

The conversation surrounding AI risks can be divided into two categories:

- Macrocosmic Risks: These encompass the larger threats to humanity that have been highlighted by various experts, including potential catastrophic scenarios articulated by figures like Eliezer Yudkowsky. This perspective underscores the existential dangers AI may pose.

- Microcosmic Risks: On a more immediate level, AI is already a tool for criminal activity. Specific instances, such as the case of a French woman scammed out of $850,000 by a conman impersonating Brad Pitt using AI-generated content, illustrate the tangible dangers present today.

How Customers Can Protect Themselves

Customers can take several proactive steps to protect themselves from AI-driven fraud. These measures focus on awareness, vigilance, and the adoption of secure practices.

- Be Alert to Phishing Attempts: AI-powered chatbots can help identify phishing attempts, but customers should still be wary of unsolicited communications, especially those requesting personal or financial information. Always verify the sender’s identity through official channels.

- Don’t Rely on Biometrics Alone: Biometric information is a crucial component of verification, but it has limitations. Customers should avoid relying solely on biometric data and should combine it with other verification methods.

- Monitor Accounts Regularly: Regularly review account statements and transaction history for any unauthorized activity. Report any suspicious transactions immediately to the financial institution.

- Use Strong Authentication: Enable multi-factor authentication (MFA) on all financial accounts. This adds an extra layer of security, making it more difficult for fraudsters to access accounts, even if they have compromised a password.

- Educate Yourself: Stay informed about the latest fraud techniques and scams. Financial institutions often provide educational resources and alerts about emerging threats.

- Report Suspicious Activity: Report any suspected fraud attempts to the financial institution and relevant authorities. This helps prevent further damage and assists in the investigation of fraudulent activities.

- Be Careful with Personal Information: Avoid sharing personal information, such as Social Security numbers, account numbers, and passwords, with anyone by phone, email, or text message unless you initiated the contact and are certain of the recipient’s identity.

- Use Secure Devices and Networks: Ensure that devices used for financial transactions are secure and protected with up-to-date antivirus software. Avoid using public Wi-Fi networks for sensitive transactions.

- Be Aware of Deepfakes: Be skeptical of video calls or audio messages from individuals, especially if the content seems unusual or the person’s behavior is inconsistent. Verify the identity of the person through other means.
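The advice to enable multi-factor authentication is easier to follow with a concrete second factor in mind. One common factor is the time-based one-time password (TOTP, RFC 6238) shown by authenticator apps: the institution and the app share a secret key, and both derive a short code from that key and the current 30-second time window. The sketch below is a minimal illustration under those assumptions, not any bank's actual implementation; the function names `hotp`, `totp`, and `verify` are our own, and it deliberately omits clock-drift windows, rate limiting, and secure secret storage.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    msg = struct.pack(">Q", counter)                 # 8-byte big-endian counter
    digest = hmac.new(secret, msg, hashlib.sha1).digest()
    offset = digest[-1] & 0x0F                       # dynamic truncation offset
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, now: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over fixed time steps."""
    if now is None:
        now = time.time()
    return hotp(secret, int(now // step), digits)


def verify(secret: bytes, submitted: str, now: Optional[float] = None) -> bool:
    """Check a user-submitted code; compare_digest avoids timing leaks."""
    return hmac.compare_digest(totp(secret, now), submitted)


# Illustrative only: this is the RFC 6238 test key; real keys are random.
secret = b"12345678901234567890"
print(totp(secret, now=59, digits=8))  # 94287082 (RFC 6238 test vector)
```

A cloned voice alone does not reveal the shared secret, although a fraudster who talks a victim into reading out a current code can still misuse it, so one-time codes should never be shared with callers.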

How the Financial Sector Would Operate Without AI

Operating the financial sector without AI would necessitate a return to more traditional methods, which, while potentially safer in some respects, would also introduce significant limitations.

- Manual Processes and Human Oversight: Without AI, financial institutions would rely more heavily on manual processes and human oversight for tasks such as fraud detection, risk assessment, and customer service. This would likely lead to increased operational costs and slower processing times. Human analysts would need to manually review transactions, assess risks, and investigate suspicious activities.

- Reduced Efficiency and Scalability: AI-powered systems enable financial institutions to process vast amounts of data quickly and efficiently. Without AI, the ability to handle large volumes of transactions and data would be significantly reduced, limiting the scalability of operations.

- Less Sophisticated Fraud Detection: AI algorithms can detect subtle patterns and anomalies that might be missed by human analysts. Without AI, fraud detection would rely on more basic rules-based systems, making it easier for fraudsters to circumvent security measures.

- Limited Personalization and Customer Experience: AI is used to personalize customer experiences, such as providing tailored financial advice and recommendations. Without AI, the ability to offer personalized services would be limited, potentially leading to a less satisfying customer experience.

- Increased Reliance on Legacy Systems: The absence of AI would likely necessitate a greater reliance on legacy systems and technologies, which may be less secure and more vulnerable to cyberattacks.

- Risk Management Challenges: AI plays a crucial role in risk management, including credit scoring, market analysis, and regulatory compliance. Without AI, financial institutions would face greater challenges in assessing and mitigating risks, potentially leading to increased financial instability.

The financial sector could still operate, but it would be less efficient, less scalable, and more vulnerable to fraud and other risks. The absence of AI would necessitate a shift back to more manual processes, potentially increasing operational costs and slowing down innovation.
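The contrast between basic rules-based fraud checks and pattern-based detection can be made concrete with a toy example. The first function applies one fixed threshold to every customer; the second flags an amount that is unusual for that particular customer, using a per-customer z-score. The z-score is a deliberately simple statistical stand-in, not the machine-learning models banks actually deploy, and the limit, cutoff, and transaction amounts are invented for illustration.

```python
from statistics import mean, stdev

# Hypothetical global limit used by the rules-based check.
RULE_LIMIT = 50_000.0


def rule_based_flag(amount: float) -> bool:
    """Basic rules-based check: the same threshold for every customer."""
    return amount > RULE_LIMIT


def anomaly_flag(history: list, amount: float, z_cutoff: float = 3.0) -> bool:
    """Flag an amount far outside this customer's own spending history.

    A per-customer z-score: a crude stand-in for learned anomaly models.
    """
    if len(history) < 2:
        return False  # not enough history to estimate spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return amount != mu  # any deviation from a perfectly constant history
    return abs(amount - mu) / sigma > z_cutoff


# Invented history of one customer's typical transactions.
history = [1_200.0, 950.0, 1_100.0, 1_300.0, 1_050.0]
suspicious = 20_000.0
print(rule_based_flag(suspicious))        # False: below the global limit
print(anomaly_flag(history, suspicious))  # True: far outside this customer's pattern
```

In this invented case the fixed rule misses a transaction roughly eighteen times larger than the customer's usual spending because it stays under the global limit, while the per-customer check catches it. Production systems combine many more signals, such as device, location, and payee history.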

India’s Epidemic of Cyber and Financial Fraud: A Detailed Analysis

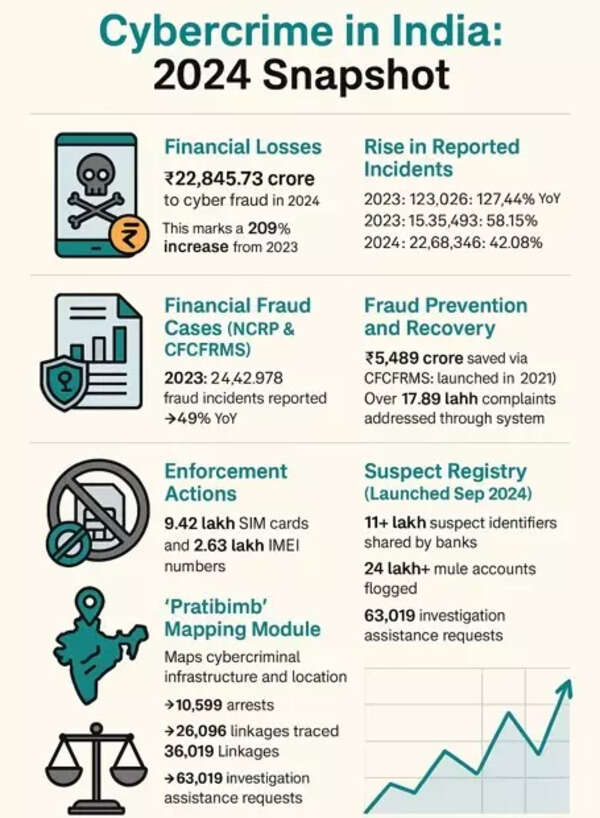

In 2024, Indians lost Rs 22,845.73 crore to cybercriminals, a 206 percent increase from the Rs 7,465.18 crore reported in 2023, according to figures the Ministry of Home Affairs presented to Parliament.

In a written reply in the Lok Sabha, Bandi Sanjay Kumar, the Minister of State for Home Affairs, cited data from the National Cyber Crime Reporting Portal (NCRP) and the Citizen Financial Cyber Fraud Reporting and Management System (CFCFRMS), both managed by the Indian Cyber Crime Coordination Centre (I4C). The number of reported cases grew as follows:

- 2022: 10,29,026 cases (up 127.44% YoY)

- 2023: 15,96,493 cases (up 55.15% YoY)

- 2024: 22,68,346 cases (up 42.08% YoY)
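The growth figures quoted here can be checked with simple arithmetic. This sketch converts the Indian-grouped numbers (10,29,026 is 1,029,026) to ordinary integers and computes year-over-year change as (new - old) / old * 100. The 2022 figure of 127.44% cannot be checked this way because the 2021 count is not given in this article; the helper name `pct_change` is our own.

```python
def pct_change(old: float, new: float) -> float:
    """Year-over-year change, in percent."""
    return (new - old) / old * 100


# Case counts quoted above, with Indian digit grouping removed.
cases = {2022: 1_029_026, 2023: 1_596_493, 2024: 2_268_346}
# Reported losses in Rs crore.
losses_crore = {2023: 7_465.18, 2024: 22_845.73}

for year in (2023, 2024):
    change = pct_change(cases[year - 1], cases[year])
    print(f"{year} cases: {change:+.2f}%")

loss_change = pct_change(losses_crore[2023], losses_crore[2024])
print(f"2024 losses vs 2023: {loss_change:+.2f}%")
```

Running it reproduces the quoted 55.15% and 42.08% case growth and the roughly 206% rise in losses.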

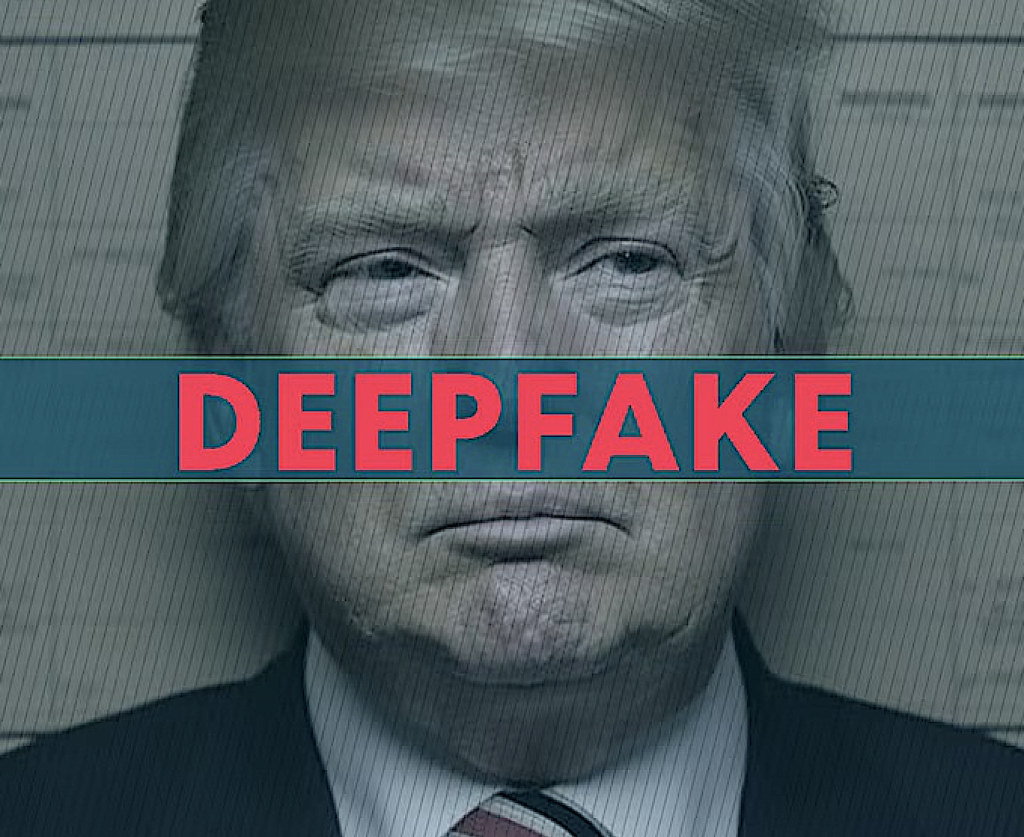

AI Trump Video Scam in Karnataka

A recent report highlighted a major fraud in Karnataka in which more than 200 people fell victim to a scam built around a deepfake video of Donald Trump. The scam, which reportedly caused losses of more than Rs 2 crore, shows how sophisticated and dangerous AI-driven financial scams are becoming.

The fraudsters used AI-generated videos promising high returns to win victims’ trust and make the scheme look legitimate.

Victims were asked to:

- Pay a Rs 1,500 registration fee

- Download a mobile application

- Complete small work-from-home tasks like writing company profiles

While the app dashboard showed increasing “earnings,” the money wasn’t real.

Eventually, the victims were asked to pay higher sums with promises of double returns and were later tricked into paying fake taxes to withdraw the money.

The scam spread across:

- Bengaluru

- Mangaluru

- Tumakuru

- Haveri (where over 15 people lost money)

The case highlights a major cybersecurity concern: a growing number of online scams in Indian cities that use deepfake videos.

Police Investigation: Haveri Cybercrime Complaint

In Haveri, a 38-year-old advocate filed a detailed cybercrime complaint after losing nearly Rs 6 lakh over three months in 2025. Encouraged by the initial payouts, he increased his investments from Rs 5,000 to Rs 1,00,000, only to be told he had to pay taxes before he could withdraw his money. None of his withdrawals were ever honored.

Police have confirmed that the scammers used:

- AI tools to create deepfake videos

- A mobile app to simulate fake dashboards and earnings

- Social media platforms (YouTube Shorts) to advertise the scam

The case is now under investigation by the Haveri Cyber, Economic and Narcotics (CEN) Crime Police.

How Did the Donald Trump Deepfake Scam Work?

- AI-Generated Trump Video: A deepfake video showed Donald Trump endorsing a hotel investment.

- App Download Prompt: The video link led users to a fake app.

- Fake Earnings Shown: Users earned small amounts initially to build trust.

- High Investment Demands: Victims were eventually asked to invest thousands of rupees.

- Fake Tax Demands: Victims were told to pay withdrawal taxes but never received funds.

This incident underscores the pressing need for increased awareness surrounding investment schemes, particularly those leveraging emerging technologies like AI. Consumers are urged to exercise due diligence, verifying investment opportunities meticulously and reporting any suspicious activities to local authorities. As the threat of AI-assisted fraud grows, it becomes imperative for both individuals and institutions to remain vigilant and informed.

The case serves as a cautionary reminder about the vulnerabilities in the digital investment landscape and the necessity for protective measures against sophisticated scams.

Ref:

- Protecting Yourself from Fraud. [https://www.ftc.gov/]

- Multi-Factor Authentication. [https://www.nist.gov/]

- AI-Generated Content and Fraud. [https://www.cisa.gov/]

- Social Media and Fraud. [https://www.fincen.gov/]

- Deepfake Banking Fraud Risk on the Rise. [https://www.deloitte.com/us/en/insights/industry/financial-services/deepfake-banking-fraud-risk-on-the-rise.html]

- AI Trump Video Scam in Karnataka. [https://examguru.co.in/current-affairs/article/ai-video-scam-karnataka]

- Twitter, YouTube
